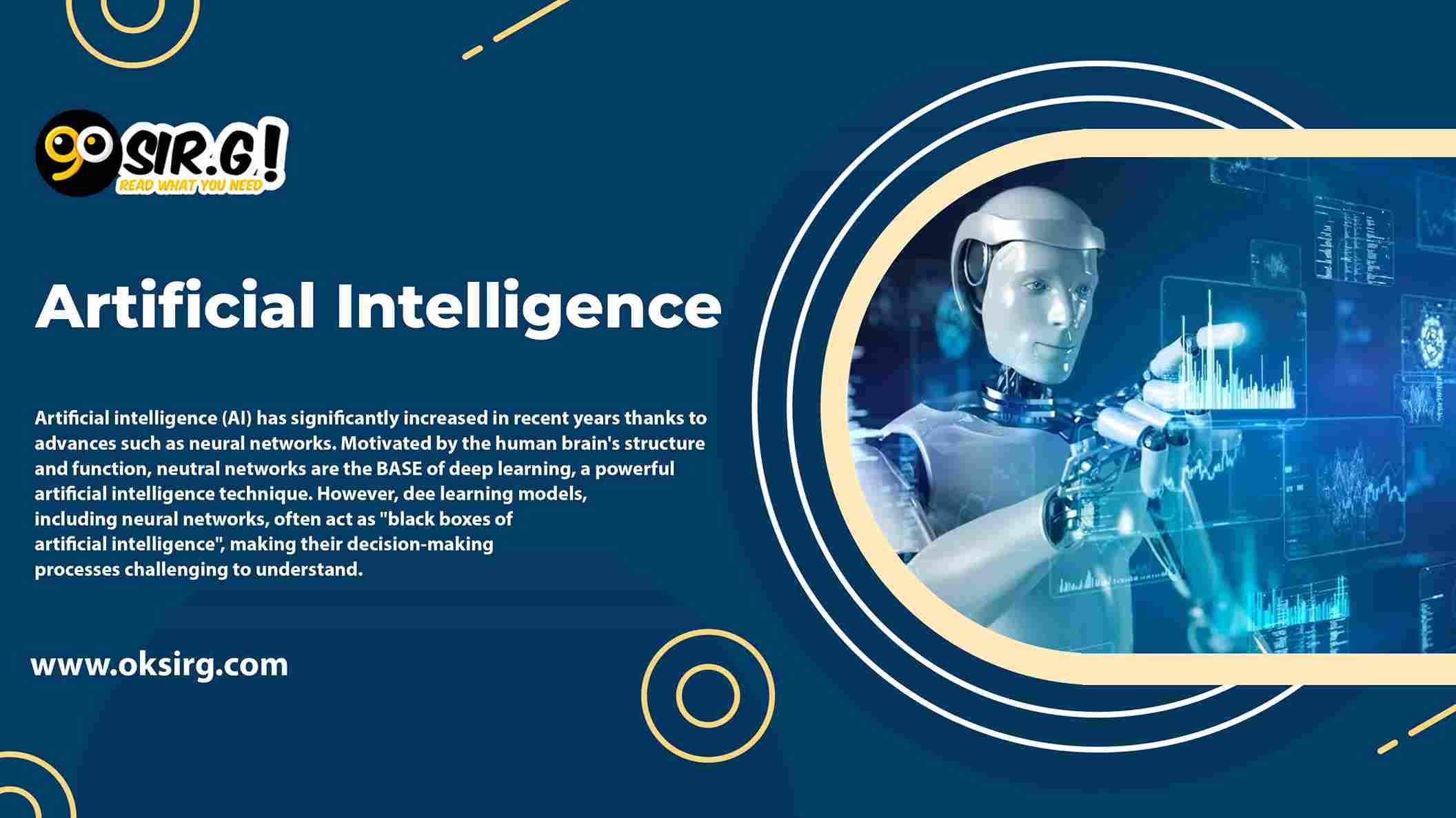

Artificial intelligence (AI) has advanced significantly in recent years thanks to developments such as neural networks. Inspired by the structure and function of the human brain, neural networks are the foundation of deep learning, a powerful AI technique. However, deep learning models often act as “black boxes”, which makes their decision-making processes difficult to understand.

AI has evolved rapidly, transforming many industries and opening up new possibilities. Two developments in particular, deep learning and explainable AI, have played critical roles in shaping the field. This article examines both and their impact on the scientific and technological landscape.

Deep Learning: Unlocking The Power Of Neural Networks:

Deep learning has become one of the most influential approaches to artificial intelligence. It allows computers to learn from examples and make decisions in a way loosely modelled on the human brain. The technique uses artificial neural networks, inspired by the brain's structure and function, to process vast amounts of data and extract complex patterns and representations.

Understanding neural networks:

Neural networks consist of interconnected layers of artificial neurons that loosely mimic neurons in the human brain. Each neuron takes its inputs, applies a mathematical function to them, and passes the output to the next layer. During training, a neural network learns from labelled data by adjusting the weights and biases of its neurons to improve prediction accuracy.
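To make the idea concrete, here is a minimal sketch of a single artificial neuron learning from labelled data. It is an illustration only, not how production deep learning systems are built: the sigmoid activation, the logical AND dataset, the learning rate, and all function names are assumptions chosen for this example.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train(samples, learning_rate=0.5, epochs=2000):
    """Adjust weights and bias with gradient descent on labelled samples."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in samples:
            output = predict(weights, bias, inputs)
            # For a sigmoid neuron with cross-entropy loss, the gradient
            # with respect to the weighted sum is (output - label).
            error = output - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Labelled data (assumed for illustration): the logical AND of two inputs.
AND_SAMPLES = [
    ([0.0, 0.0], 0),
    ([0.0, 1.0], 0),
    ([1.0, 0.0], 0),
    ([1.0, 1.0], 1),
]

weights, bias = train(AND_SAMPLES)
predictions = [int(predict(weights, bias, x) >= 0.5) for x, _ in AND_SAMPLES]
print(predictions)
```

A real network stacks many such neurons into layers and computes the gradients for all of them with backpropagation, but the loop is the same in spirit: predict, measure the error, and nudge the weights and biases.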

Powering a wide range of applications:

Deep learning has transformed many application areas, including computer vision, natural language processing, and speech recognition. It has enabled breakthroughs in image classification, object recognition, language translation, and voice assistants. Thanks to deep learning algorithms, these applications have become more accurate, more efficient, and better able to handle complex tasks.

Explainable AI: Bridging The Gap Between AI And Human Understanding:

The need for transparency and interpretability has grown as artificial intelligence systems have become more complex and powerful. Explainable AI (XAI) is the field concerned with developing AI models and techniques that can clearly explain their decisions and actions. Its goal is to help users understand and trust the results these systems produce.

The challenge of black-box AI:

Many AI models, deep neural networks in particular, act as black boxes: it is difficult to understand how they arrive at their conclusions. This lack of visibility is a concern, especially in applications such as healthcare, finance, and autonomous systems, where decisions affect people’s lives.

Promoting transparency and trust:

Explainable AI addresses these problems by developing methods to interpret and explain the decision-making processes of AI models. Techniques such as rule extraction, attention mechanisms, and feature importance analysis shed light on the factors that drive an AI system's outputs. By providing these insights, XAI promotes transparency, trust, and accountability in AI applications.
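As an illustration of feature importance analysis, the sketch below uses permutation importance: shuffle one input feature at a time and measure how much the model's error grows. This is one common approach among several, shown here under stated assumptions. The `toy_model` function is a hypothetical stand-in for a trained model, and the data is randomly generated for the example.

```python
import random


def toy_model(row):
    """Hypothetical stand-in for a trained model (assumed for illustration).

    It relies strongly on feature 0, weakly on feature 1, and ignores feature 2.
    """
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=10, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle only this column, breaking its link to the targets.
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            permuted = [r[:col] + [v] + r[col + 1:]
                        for r, v in zip(rows, shuffled)]
            increases.append(
                mean_squared_error(model, permuted, targets) - baseline
            )
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data (assumed): 200 rows with 3 features in the range [-1, 1].
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, targets)
for index, score in enumerate(importances):
    print(f"feature {index}: {score:.3f}")
```

A feature the model depends on heavily produces a large jump in error when shuffled, while a feature the model ignores produces none. The appeal of this approach is that it treats the model purely as a black box, so it needs no access to the model's internals.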

The Synergy Between Deep Learning And Explainable AI:

Deep learning and explainable AI are not mutually exclusive; on the contrary, they complement each other and strengthen the AI ecosystem as a whole. Deep learning provides the power and precision needed to solve complex problems, while explainable AI helps ensure that those solutions are transparent, interpretable, and reliable.

- Ethical considerations

Combining deep learning with explainable AI helps address the ethical questions raised by the spread of AI. It enables organizations to adhere to ethics, compliance, and integrity policies by explaining AI decisions clearly, mitigating bias, and ensuring accountability.

- Improved user acceptance

Explainable AI also plays a crucial role in user adoption. When AI results are explained, users gain a deeper understanding of the system’s reasoning, which increases trust in and acceptance of AI-based solutions.

Deep learning and explainable AI are two significant advances in AI. Deep learning has unlocked the power of neural networks and enabled AI systems to learn and carry out complex tasks with high accuracy. Explainable AI, on the other hand, bridges the gap between AI and human understanding by providing explanations for AI decisions and promoting transparency, trust, and the ethical use of AI. Together, these advances are shaping the future of AI, allowing us to realize the potential of AI while ensuring it is used responsibly across the board.

Artificial intelligence: Frequently asked questions about AI

What is artificial intelligence (AI)?

Artificial intelligence (AI) is a branch of computer science that aims to simulate human intelligence, often through machine learning, in order to perform tasks such as data analysis and natural language processing.

Why are we so afraid of AI?

The advent of artificial intelligence has stirred feelings of insecurity, fear, and even hostility toward a technology that most people don’t fully understand. AI can automate tasks previously reserved for humans, such as writing an essay, organizing an event, or teaching a language. Beyond that, some experts fear that unregulated AI systems could lead to disinformation, cybersecurity threats, job losses, and political bias.
